People in the Times of the Red Queen Effect

Unfolding the AI Narrative. Part 1.5

Welcome to the Tales of a Cyberscout, where we explore topics ranging from active cyber defence and detection engineering, to technology and society, all of it with a drizzle of zesty cynicism and philosophical gardening.

Aperitif

This article originally started as the Aperitif for Part 2 of the Unfolding the AI Narrative series, but it quickly became its own spinoff short. That’s why I’m calling it Part 1.5. I hope you enjoy it, fellow cyberscout.

Evolution or Delusion?

It has been a few interesting weeks for Shoggoth and the world of IT. We now know that people have hobbies like watching AI slowly drive Microsoft employees insane, and it would seem that GenAI doesn't think but rather projects an illusion of thinking. And here I was, believing I was interacting with a sentient intelligence with a chat interface.

Le sigh... Thanks for destroying that Apple.

Oh, but perhaps I don't need AI to think? I only need it to need me. Ain't nobody have time to build true friendships and relationships, right? This is the phenomenon described by Rob Horning as companionship without companions. Best for me to share his thoughts on the matter:

Clearly tech companies assume that chatting with objects and compelling them to explain themselves is something everyone has been longing for, hoping to at last reduce their dependency on social contact. Many anticipated AI applications seem predicated on the idea that our experience of the world should require less thought and have better interfaces, that we want to consume only the shape and form of conversation, consume simulations of speaking and listening without having to risk direct engagement with other people...

Consumerism is loneliness; it figures other people as a form of inconvenience and individualized consumption as the height of self-realization.

Guess what, a lot of social media platforms only see you as a commodity, a fertile field to farm for attention. This relentless pursuit of engagement, however, often reduces complex human interactions to a series of quantifiable metrics, stripping away the nuance and vulnerability essential for genuine connection. But the human garden of emotions cannot be subjected to the hyper-efficiency paradigm.

Sorry (not sorry) ai-hype tribe.

Recent research is telling us that using AI as a drop-in replacement for a therapist is not safe. Hear what Kevin Klyman has to say:

In the middle of this meaning crisis, we are tormented by the Red Queen Fallacy, and we employ proxy goals as replacement for meaningful progress in the shape of efficiency gains driven by AI.

This raises an obvious question: are you simply running on a treadmill or making progress towards meaningful outcomes?

The Red Queen Effect goes deeper than this though. It is a phenomenon of evolutionary pressures. You have no choice but to adopt the new tools that make your product and operations more efficient. To keep up with the competition, you are forced to implement optimization artefacts that help you do more with less.

As a competitor adapts they gain efficiency, speed and value which creates pressure on all others to adapt. This pressure mounts as more competitors adapt until all are eventually forced to change. It’s why guns replaced spears or electric lamps replaced gas lamps. (What is conversational programming? by Simon Wardley)

But why? —> Jevons Paradox.

Enhancements in efficiency often lead to greater consumption, as newfound capabilities unlock previously inaccessible value streams. Because production of a certain good or service becomes cheaper, and the entry-barrier lower, more people flock to the new exploitable niche.

Under certain conditions, typically when demand is sensitive enough to price, demand for that product (and not just its supply) grows enough to outweigh the savings per unit. Think of how efficient printing made books cheaper, which led to more books being printed and consumed globally, rather than less paper being used overall.
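For the numerically inclined cyberscout, here is a minimal sketch of that rebound. It assumes a constant-elasticity demand curve, which is a textbook simplification and not something taken from the sources quoted here. The function name `resource_use` and its parameters are hypothetical, picked only for illustration.

```python
def resource_use(efficiency: float, elasticity: float, base_demand: float = 100.0) -> float:
    """Total resource consumed under an assumed constant-elasticity demand curve.

    efficiency: units of output per unit of resource (1.0 is the baseline).
    elasticity: price elasticity of demand, expressed as a positive number.
    base_demand: output demanded at the baseline price of 1.0 (illustrative).
    """
    price = 1.0 / efficiency                       # output gets cheaper as efficiency rises
    demand = base_demand * price ** (-elasticity)  # cheaper output means more output demanded
    return demand / efficiency                     # resource needed to produce that output


if __name__ == "__main__":
    for elasticity in (0.5, 1.0, 1.5):
        used = resource_use(efficiency=2.0, elasticity=elasticity)
        print(f"elasticity={elasticity}: resource use goes from 100.0 to {used:.1f}")
```

In this toy model, doubling efficiency only reduces total resource use when elasticity is below 1. Above 1, consumption ends up higher than before the efficiency gain, which is the Jevons scenario.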

So with higher efficiency from AI, will I need fewer engineers? No! To mine the “ore” of new unlocked value, you are forced to reallocate spending to the new stream. Data Scientist ads rose exponentially. The rise of AI drives adjacent domain growth too: all engineers, regardless of whether AI is their core discipline, have to evolve to understand and use the new technologies at hand. You won’t need fewer engineers, you will need more, because of two factors: complexity and growth.

Why growth? Because even with efficiency gains you will still want to grow as a business right? Efficiency gains will only ensure you are running at the same pace as the competition. But a business doesn’t just want to keep up, it wants to grow. And growth in the AI revolution comes with greater complexity.

This cycle of efficiency leading to greater consumption and complexity isn't new. We saw a similar pattern with the rise of container orchestration. Remember the boom of Kubernetes?

When Kubernetes was born you could suddenly do magical things like run a self-healing cluster of containers that could scale up and down with minimal friction. The efficiency gains over regular physical or virtual servers that had to be manually wired and scaled alongside outage windows were astronomical. But Kubernetes is not simple to understand and maintain (at least not in the pre-AI era). The complexity of the tool required businesses to hire entire teams of DevOps engineers just to maintain regular operations.

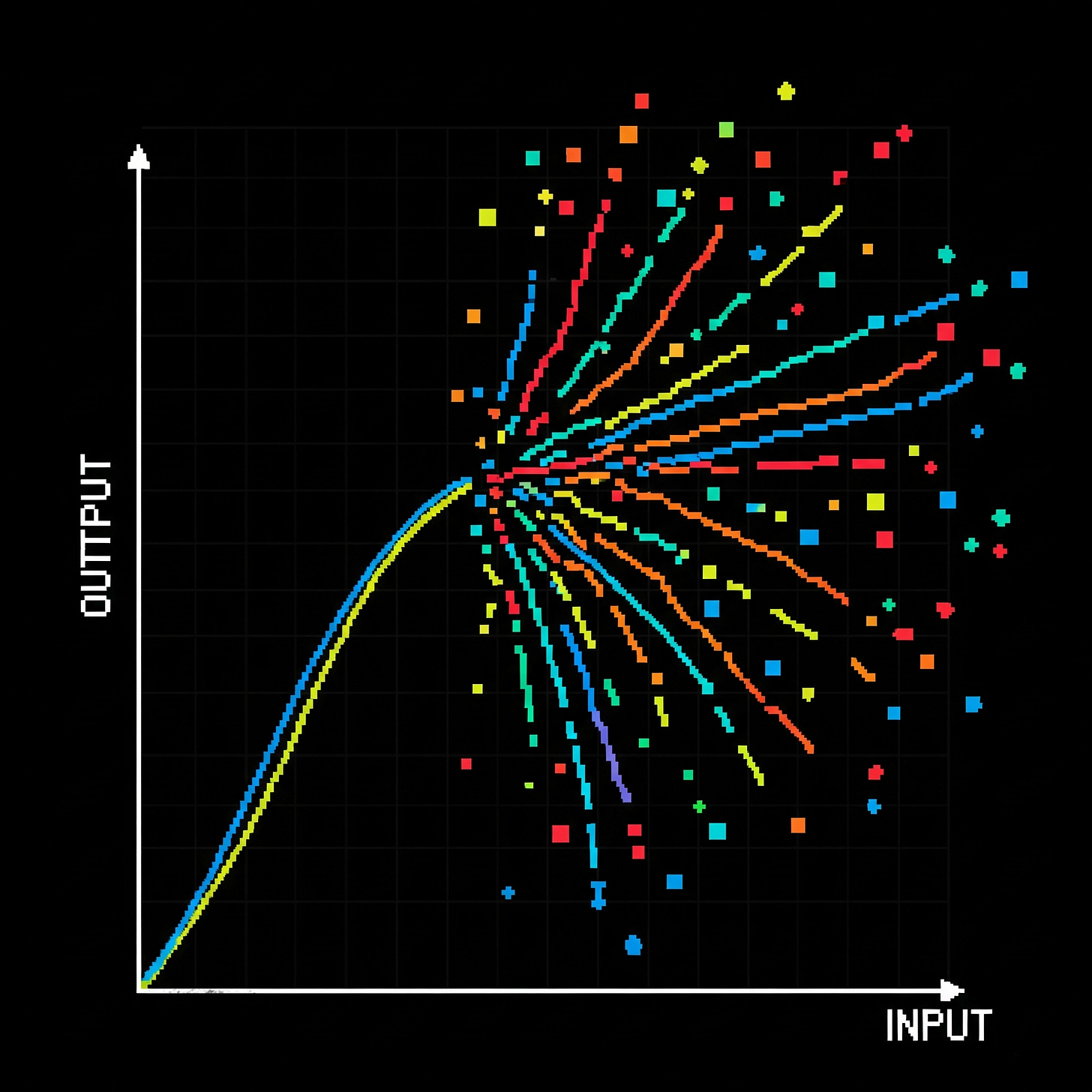

The server admin didn’t disappear, it became a DevOps engineer. Higher complexity means higher energy required to avert the threat of entropy disrupting the predictability of your operations. Efficiency gains don’t translate linearly to lower complexity. See Jevons Paradox, Rebound Effect and Tesler's Law of Conservation of Complexity.

Tesler states that every application has an inherent amount of complexity that cannot be removed. When you simplify a process for the user (an efficiency gain for them), the complexity is simply moved elsewhere, usually into the software's code or the backend system.

Think about the level of complexity and energy consumption required to generate large language models, of which general users only know the shallow aspect of a flat chat interface. The API that allows people to interact with the technology makes it look simple, but is it?

I wrote profusely about this topic as it relates to cyber threat intelligence in The Uncertainty of Intelligence and the Entropy of Threats.

Increased Production != Better Decisions

Just because you can do something faster or more efficiently doesn’t mean that increased output necessarily translates to increased value or improved quality.

If you are pouring huge investments into AI-driven solutions for your business problems, how are you measuring whether more/faster truly translates to better?

You know what is the elephant in the room? —> Decision-making. Orientation. Strategy.

The question as to whether we are only shifting the bottleneck from one of production to one of judgement (decision) doesn’t have a seat at the table in ai-hype dinners.
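One way to picture that bottleneck shift is a toy two-stage pipeline: items get produced, then a human has to review them before they count. This is only a sketch under made-up numbers; `backlog_after`, `produced_per_week` and `review_capacity_per_week` are hypothetical names, not a model of any real team.

```python
def backlog_after(weeks: int, produced_per_week: int, review_capacity_per_week: int) -> int:
    """Items still waiting for a human decision after a number of weeks."""
    backlog = 0
    for _ in range(weeks):
        backlog += produced_per_week                      # production stage adds work
        backlog -= min(backlog, review_capacity_per_week)  # judgement stage drains what it can
    return backlog


def delivered_per_week(produced_per_week: int, review_capacity_per_week: int) -> int:
    """Steady-state output is capped by the slower of the two stages."""
    return min(produced_per_week, review_capacity_per_week)


if __name__ == "__main__":
    # Before: production is the bottleneck, nothing piles up.
    print(delivered_per_week(10, 12), backlog_after(4, 10, 12))
    # After a 10x production boost with the same review capacity:
    # delivery barely moves, and the queue for judgement grows every week.
    print(delivered_per_week(100, 12), backlog_after(4, 100, 12))
```

In this sketch a tenfold increase in production lifts delivery from 10 to 12 items per week, while the pile of undecided work grows by 88 items every week.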

AI is way better at accelerating production, but not necessarily always better at accelerating judgement. It depends on the use-case, and the complexity of what you are trying to achieve. You can vibe-code an app in a day, and ask clients to give you their PII when they sign up, but is your app secure?

Be real.

Sorry to break this news to you, but your operational pipeline will always, at some point, include a human-in-the-loop. Is this human equipped with the right training, insight and tools to make decisions at the new efficiency curve speed?

Imagine you buy a new car that can go from 0-100 km/h in less than 1s and achieve speeds of 1000 km/h due to 10x efficiency gains in car components (engine, chassis, materials, etc.). It probably means you can now commute to work faster, but it also increases the probability of a car crash exponentially (all other conditions remaining equal, like road infrastructure).

As long as you are the driver, to avoid collisions with this new hyper-speed car, your reaction and decision-making speed also needs to grow exponentially.
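A back-of-the-envelope sketch of the car analogy, assuming a constant speed and an illustrative human reaction time of 1.5 seconds (that figure is my assumption, and the function names are hypothetical). It only covers the distance travelled before the driver reacts at all, and ignores braking distance entirely.

```python
def reaction_distance_m(speed_kmh: float, reaction_time_s: float) -> float:
    """Metres travelled at constant speed before the driver even starts to react."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms * reaction_time_s


def required_reaction_time_s(speed_kmh: float, distance_budget_m: float) -> float:
    """Reaction time needed to stay within a given distance budget at that speed."""
    return distance_budget_m / (speed_kmh / 3.6)


if __name__ == "__main__":
    budget = reaction_distance_m(100, 1.5)  # distance "spent" reacting at 100 km/h
    print(f"100 km/h:  {budget:.1f} m travelled before reacting")
    print(f"1000 km/h: {reaction_distance_m(1000, 1.5):.1f} m travelled before reacting")
    print(f"reaction time needed at 1000 km/h: {required_reaction_time_s(1000, budget):.2f} s")
```

Even in this simplified model, the driver has to decide ten times faster just to keep the same margin they had at 100 km/h.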

We addressed this topic in an earlier post. Decision matters because it represents an optionality token:

decision-making is the act of transforming available information into actions that generate the conditions for more decisions to be made…

The whole point of making a decision is to give you the option to make more decisions in the future. You don’t want to end up in a checkmate situation, with no further moves to make. This is the equivalent of having your entire network encrypted by ransomware with no backups…

If efficiency gains driven by AI increase the output of a certain unit of work or product, but you don’t invest time in creating new decision-making frameworks that provide direction to the new velocity of production, how do you know whether it’s inertia or intentional strategy driving things forward?

There is an unavoidable human scale that hype-bro discourse leaves behind: the scale at which meaning is produced. Decision-making requires judgement, and judgement is deeply entangled with discernment, a certain attunement to the nuances of the lived operational environment, and a necessary human time where wisdom lives.

That word. Wisdom. We seem to have forgotten about it. I still remember that phrase from the book “Siddhartha” by Hermann Hesse:

Knowledge can be communicated, but not wisdom. One can find it, live it, do wonders through it, but one cannot communicate and teach it.

Guess what? RAG is not a drop-in replacement for the type of human-acquired wisdom that makes an organization precisely what it is: organized people. And people exist within a cultural landscape. It is people who make and break culture. It’s culture that brings people together, the glue uniting them behind a shared purpose.

Wisdom is key to this process, a reservoir of lived experience attuned to the speed of human time and the codes of the human interface. It resists capture by stochastic machines (ML models) and metrizable data points.

There is a better word to describe this subtle interaction of organization, people, culture and wisdom: Lore. Venkatesh Rao has a beautiful series on this topic. From Epic vs Lore:

What makes lore important is that it’s what persists through epic ages and dark ages, through booms and busts, through iconic era-defining product seasons and incremental update seasons that merely keep the product alive and chugging along. Lore creates slow-burn meaning in a way that isn’t subject to the vagaries of epic winds.

And this is what’s happening right now all around us, the ai-hype is a generator of incredible Epics, flourishing futures driven by endless optimization gains with no tradeoff.

The question is: what role are you going to play in that Epic? Be smart. Listen. What is shared Lore telling you?

You can’t RAG your way around meaning, and meaning is produced in community, in relation to others around you. You cannot generate meaning faster, you cannot optimize it. It has its own tempo. To the meaning-making process of Lore, you matter. In other words, as the foxwizard puts it: your influence is not zero.

I suggest you give that article a go. If I had to recommend someone to follow, it would be the foxwizard. There is an aliveness to his writing that brings out hidden layers of our human life, the ones that the treadmill likes to silence.

Unfortunately, with some exceptions, most written content nowadays has the smell of punchy, short, catchy, polished words with no soul. Full AI-generated articles that want to pass for original thinking. Look for anything other than that. Look for hybrid-thinking that encodes the art of slow reflection, even when accompanied by AI.

There is some sort of magic that still lives in the word that is born out of deep reflection, an intimacy of thought and a depth of colour that help nourish collective meaning-making around a shared fire.

It is at least something I want to orient towards.

See you guys soon. Part 2 of the Unfolding the AI Narrative series is ready.

Stay tuned, stay fresh, stay antimemetic.